Agentic AI in 10 Questions

- Meltem Seyhan

- Jun 26, 2025

- 4 min read

As someone who learns best by asking questions and getting clear, simple answers, I decided to explore Agentic AI the same way. In this post, I’ve broken down what I’ve learned into 10 straightforward questions and answers—designed to help you grasp one of the most talked-about topics in AI today: Agentic AI.

What does “Agentic AI” mean?

Agentic AI refers to artificial intelligence systems that can make autonomous decisions, take actions toward defined goals, and operate with a degree of independence. Unlike traditional AI, which responds to inputs passively, agentic AI actively plans, reasons, and interacts with its environment to achieve objectives.

How is an agent different from a typical LLM app using tools and RAG?

A program using an LLM with tools and RAG typically follows a fixed workflow: it retrieves data, runs the LLM, and outputs a result. An agent, on the other hand, can reason, make decisions, and adapt its actions dynamically. Agents often loop through planning, acting, and observing—deciding what to do next based on outcomes, not just executing a pre-defined pipeline.
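To make the plan, act, observe loop concrete, here is a minimal sketch in plain Python. The `fake_policy` function is a stand-in I made up for the LLM's decision step, and the `search` and `summarize` tools are hypothetical placeholders; a real agent would call a model and real APIs at those points.

```python
from typing import Callable

# Hypothetical tools; a real system would wrap APIs, databases, and so on.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"3 documents found for '{query}'",
    "summarize": lambda text: f"summary of: {text}",
}


def fake_policy(goal: str, observations: list[str]) -> tuple[str, str]:
    """Stand-in for the LLM: chooses the next action from what was observed so far."""
    if not observations:
        return "search", goal
    if len(observations) == 1:
        return "summarize", observations[-1]
    return "finish", observations[-1]


def run_agent(goal: str, max_steps: int = 5) -> str:
    """Loop through plan -> act -> observe until the policy decides to finish."""
    observations: list[str] = []
    for _ in range(max_steps):
        action, argument = fake_policy(goal, observations)  # plan
        if action == "finish":
            return argument
        observations.append(TOOLS[action](argument))  # act, then observe
    # Step budget exhausted: return the latest observation, if any.
    return observations[-1] if observations else ""


if __name__ == "__main__":
    print(run_agent("agentic AI"))
```

The key difference from a fixed pipeline is that the next step is chosen inside the loop from the observations so far, and `max_steps` keeps the loop from running forever.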

Why do we need frameworks like LangChain, LangGraph, or Google ADK? What problems do they solve?

Tools like LangChain, LangGraph, and Google ADK help manage the complexity of building agentic systems. They provide solutions for:

Tool orchestration: Connecting LLMs with external tools (APIs, databases, etc.).

Memory management: Letting agents recall past interactions.

Reasoning and planning: Structuring multi-step tasks.

Control flow: Handling branching logic, loops, and retries.

Observability: Debugging and monitoring agent behavior.

These frameworks solve the repetitive plumbing so developers can focus on designing smart behaviors.
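To give a feel for the kind of plumbing involved, here is a small hand-rolled sketch of three of the items above: tool registration, retries, and logging. `ToolRegistry` and `flaky_lookup` are names I invented for illustration; this is not the API of LangChain, LangGraph, or Google ADK.

```python
import logging
from typing import Any, Callable, Optional

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent")


class ToolRegistry:
    """Hypothetical helper: registers tools, retries failures, and logs every call."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., Any]] = {}

    def register(self, name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
        def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[name] = fn
            return fn
        return decorator

    def call(self, name: str, *args: Any, retries: int = 2, **kwargs: Any) -> Any:
        tool = self._tools[name]  # unknown tools fail fast with KeyError
        last_error: Optional[Exception] = None
        for attempt in range(1, retries + 2):
            try:
                result = tool(*args, **kwargs)
                log.info("tool=%s attempt=%d ok", name, attempt)
                return result
            except Exception as exc:  # retry on any tool error in this sketch
                log.warning("tool=%s attempt=%d failed: %s", name, attempt, exc)
                last_error = exc
        raise RuntimeError(f"tool {name!r} failed after {retries + 1} attempts") from last_error


registry = ToolRegistry()
calls = {"count": 0}


@registry.register("flaky_lookup")
def flaky_lookup(key: str) -> str:
    """Fails on its first call to simulate a transient API error."""
    calls["count"] += 1
    if calls["count"] == 1:
        raise TimeoutError("temporary outage")
    return f"value for {key}"
```

Calling `registry.call("flaky_lookup", "user-42")` fails once, logs a warning, and succeeds on the second attempt. Frameworks package this sort of logic, along with memory and planning, so you don't rewrite it for every project.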

When and why should we use multi-agent systems?

We need multi-agent systems when a single agent can’t handle all tasks effectively due to complexity, specialization, or scalability. They’re useful when:

Tasks require different skills (e.g., researcher vs. coder).

Workload can be parallelized for speed.

Coordination or negotiation between roles is needed.

Modular design makes the system easier to build, test, and maintain.

Multi-agent setups like swarm and supervisor help solve the scaling and performance issues that single-agent systems encounter—especially when handling messy, real-world workloads across multiple domains.
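As a rough illustration of the parallelization point, the sketch below fans one task out to several specialized sub-agents with `asyncio.gather`. The `run_subagent` coroutine and the role names are assumptions for the example; the short `sleep` stands in for a model or API call.

```python
import asyncio


async def run_subagent(role: str, task: str) -> str:
    """Hypothetical sub-agent; the sleep stands in for an LLM or API call."""
    await asyncio.sleep(0.01)
    return f"{role}: done with {task}"


async def fan_out(task: str, roles: list[str]) -> list[str]:
    """Run all sub-agents concurrently; results come back in the order of `roles`."""
    return await asyncio.gather(*(run_subagent(role, task) for role in roles))


if __name__ == "__main__":
    print(asyncio.run(fan_out("market report", ["researcher", "coder"])))
```

Because the sub-agents wait on I/O concurrently, total latency is close to that of the slowest agent rather than the sum of all of them.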

What are the common architectures in agentic systems?

Single Agent systems involve one LLM agent that has access to all tools and instruction contexts. This setup works well in simple scenarios, but as more “distractor” domains are introduced, its accuracy drops significantly and its token usage grows.

Swarm architectures use a group of specialized sub-agents, where any agent can become active and respond directly to the user. This setup tends to offer higher accuracy than the supervisor model and scales more efficiently in terms of cost. However, it requires each agent to be aware of all others and be capable of interacting directly with the user, which adds complexity.

Supervisor architectures rely on a central coordinator agent that delegates tasks to sub-agents and relays their responses back to the user. Only the supervisor interacts with the user. This model is highly flexible and makes it easy to plug in external agents.

Learn more in this benchmark post on agent architectures.
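The difference between the supervisor and swarm patterns is easier to see in code. This is a toy sketch under my own assumptions: the sub-agents are plain functions, and `route` is a hypothetical keyword router standing in for an LLM's routing decision.

```python
from typing import Callable


def researcher(message: str) -> str:
    return f"[researcher] notes on: {message}"


def coder(message: str) -> str:
    return f"[coder] patch for: {message}"


AGENTS: dict[str, Callable[[str], str]] = {"researcher": researcher, "coder": coder}


def route(message: str) -> str:
    """Hypothetical keyword router standing in for an LLM routing decision."""
    return "coder" if "bug" in message.lower() else "researcher"


def supervisor(message: str) -> str:
    """Supervisor pattern: only the supervisor talks to the user; it delegates and relays."""
    answer = AGENTS[route(message)](message)
    return f"[supervisor] {answer}"


class Swarm:
    """Swarm pattern: the active agent answers the user directly and can hand off."""

    def __init__(self, start: str = "researcher") -> None:
        self.active = start

    def handle(self, message: str) -> str:
        target = route(message)
        if target != self.active:
            self.active = target  # handoff: the new agent stays active for later turns
        return AGENTS[self.active](message)
```

In the supervisor version every reply passes through one extra hop, while in the swarm version the active agent replies on its own and remembers that it holds the conversation.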

What is the A2A (Agent-to-Agent) Protocol?

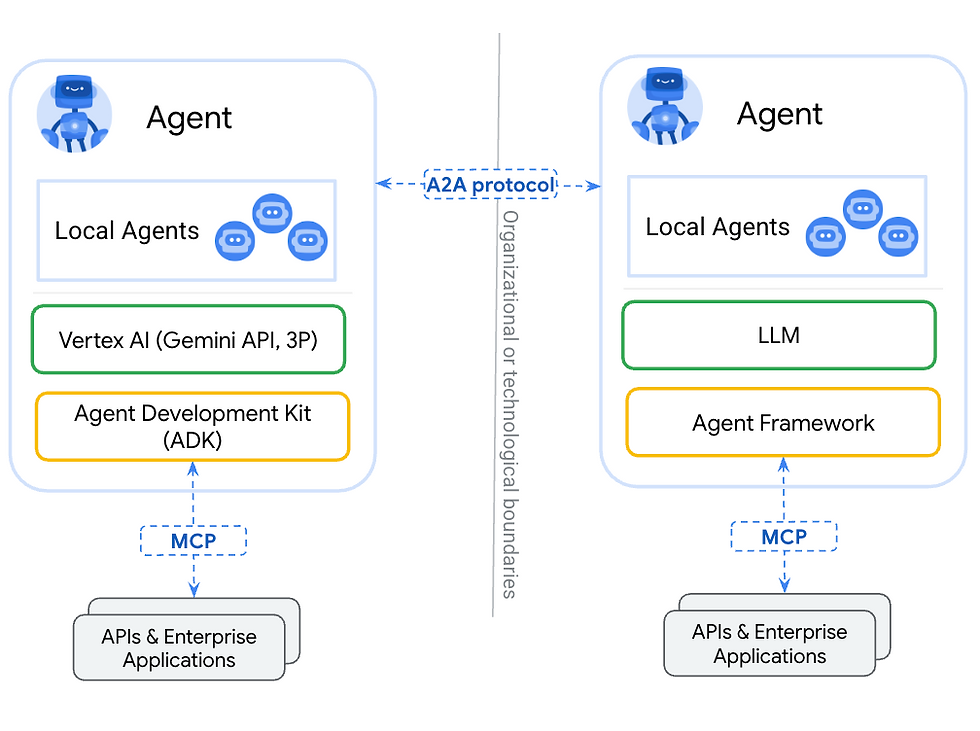

The A2A (Agent-to-Agent) Protocol is an open, Google-backed communication standard that defines how autonomous agents interact and share information with each other. It ensures agents can:

Exchange messages in a structured, understandable way

Negotiate, coordinate, or delegate tasks

Maintain context and identity during multi-step interactions

A2A is commonly used in multi-agent systems, especially in decentralized or interoperable setups. It helps agents from different organizations or platforms work together securely and reliably.

You can dive deeper into the A2A Protocol here.
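To illustrate the three capabilities listed above (structured messages, delegation, and shared context), here is a deliberately simplified message envelope. Please note that `AgentMessage` and all of its fields are my own invention for teaching purposes and are not the actual A2A wire format; the spec defines the real schema.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field


@dataclass
class AgentMessage:
    """Illustrative envelope only; NOT the real A2A schema."""

    sender: str       # identity of the agent sending the message
    recipient: str    # identity of the agent receiving it
    intent: str       # hypothetical values such as "delegate" or "result"
    body: str
    # One context ID ties every message in a multi-step interaction together.
    context_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def reply(self, intent: str, body: str) -> "AgentMessage":
        """Answer within the same context, swapping sender and recipient."""
        return AgentMessage(
            sender=self.recipient,
            recipient=self.sender,
            intent=intent,
            body=body,
            context_id=self.context_id,
        )

    def to_json(self) -> str:
        return json.dumps(asdict(self))


if __name__ == "__main__":
    request = AgentMessage("planner", "researcher", "delegate", "find sources on MCP")
    print(request.to_json())
    print(request.reply("result", "3 sources found").to_json())
```

The reply reuses the request's `context_id`, which is the basic idea behind keeping context and identity intact across a multi-step exchange.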

Do I need A2A for my own multi-agent system?

No. You only need A2A when your agents must communicate across different systems, teams, or organizations. In a closed, in-house multi-agent system, especially one built on a single platform or framework, agents can often interact directly as sub-agents, be exposed as tools (“agent as a tool”), or communicate through internal routing mechanisms such as LangGraph-style execution flows. If agents need access to external tools or data sources, MCP can handle that by offering a standardized, extensible way to connect models with context. In short, A2A is useful for distributed, cross-platform agent networks, but simpler architectures often don’t need it.
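The “agent as a tool” idea can be sketched in a few lines. Everything here is hypothetical: `math_agent` is a trivial stand-in for a sub-agent, and `ParentAgent.run` uses a simple digit check where a real agent would let the LLM choose a tool.

```python
import re
from typing import Callable


def math_agent(task: str) -> str:
    """Hypothetical sub-agent: adds up every integer it finds in the task text."""
    return str(sum(int(number) for number in re.findall(r"-?\d+", task)))


class ParentAgent:
    """Treats other agents as ordinary callables in its tool table."""

    def __init__(self) -> None:
        self.tools: dict[str, Callable[[str], str]] = {}

    def add_tool(self, name: str, tool: Callable[[str], str]) -> None:
        self.tools[name] = tool

    def run(self, task: str) -> str:
        # Stand-in for the LLM's tool choice: delegate anything containing digits.
        if "math" in self.tools and any(ch.isdigit() for ch in task):
            return self.tools["math"](task)
        return f"no tool needed for: {task}"


parent = ParentAgent()
parent.add_tool("math", math_agent)  # the sub-agent is registered like any other tool
```

No cross-agent protocol is involved: the parent calls the sub-agent in-process, which is why closed systems can usually skip A2A.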

What is MCP (Model Context Protocol)?

Model Context Protocol (MCP) is an Anthropic-led open standard that defines a universal way for a single agent or model to connect with external data sources, tools, and services. You can dive deeper into the MCP spec here.
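Under the hood, MCP messages are JSON-RPC 2.0. As a rough sketch of what a client sends, the snippet below builds a `tools/list` request and a `tools/call` request. The `get_weather` tool and its `city` argument are hypothetical, and the transport and the initial handshake are left out.

```python
import json
from itertools import count
from typing import Any

_request_ids = count(1)  # JSON-RPC requests carry a unique id


def jsonrpc_request(method: str, params: dict[str, Any]) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request object."""
    return {"jsonrpc": "2.0", "id": next(_request_ids), "method": method, "params": params}


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list", {})

# Invoke one tool by name; "get_weather" and its arguments are made up for this example.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Istanbul"}},
)

if __name__ == "__main__":
    print(json.dumps(call_tool, indent=2))
```

Because every server speaks the same message shape, a client written once can discover and call tools from any MCP server without custom glue code.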

How are A2A and MCP different?

A2A (Agent-to-Agent) is a protocol for autonomous agents to discover each other, exchange tasks, and collaborate, which makes it ideal for multi-agent workflows. MCP (Model Context Protocol) standardizes how a single agent connects to external tools and data sources, making it easy to plug in context like files, APIs, or databases. The two are complementary and are often used together in complex agentic systems.

What’s next for Agentic AI?

Agentic AI is still in its early stages, but rapid progress is being made in planning, memory, and tool integration. As LLMs get better at reasoning and coordinating across tasks, we can expect more reliable, real-world agent applications—from AI assistants that manage your calendar to autonomous research agents. The biggest opportunities now lie in improving evaluation, trust, and collaboration between agents. It’s a fast-evolving space—and we’re just scratching the surface.

I’d love to hear from you—which topic should I explore in my next post? Multi-agent orchestration, real-world use cases, agent memory design, or something else? Drop a comment or message me—let’s keep learning together.
